Pick-and-Place Workflow in Gazebo Using ROS

This example shows how to setup an end-to-end pick-and-place workflow for a robotic manipulator like the Kinova® Gen3 and simulate the robot in the Gazebo physics simulator.

Overview

This example identifies and recycles objects into two bins using a Kinova Gen3 manipulator. The example uses tools from five toolboxes:

Robotics System Toolbox™ is used to model and simulate the manipulator.

Stateflow® is used to schedule the high-level tasks in the example and step from task to task.

ROS Toolbox is used for connecting MATLAB® to Gazebo.

Computer Vision Toolbox™ and Deep Learning Toolbox™ are used for object detection using simulated camera in Gazebo.

This example builds on key concepts from the following related examples:

Plan and Execute Task- and Joint-Space Trajectories Using Kinova Gen3 Manipulator shows how to generate and simulate interpolated joint trajectories to move from an initial to a desired end-effector pose.

Computer Vision Toolbox example: Train YOLO v2 Network for Vehicle Detection (Computer Vision Toolbox)

ROS Toolbox example: Get Started with Gazebo and Simulated TurtleBot (ROS Toolbox)

Robot Simulation and Control in Gazebo

Start a ROS-based simulator for a Kinova Gen3 robot and configure the MATLAB® connection with the robot simulator.

This example uses a virtual machine (VM) containing ROS Melodic available for download here. If you have never used it before, see Get Started with Gazebo and Simulated TurtleBot (ROS Toolbox).

In VM settings, VM > Settings > Hardware > Display, disable Accelerate 3D graphics.

Start the Ubuntu® virtual machine desktop.

In the Ubuntu desktop, click the Gazebo Recycling World icon to start the Gazebo world built for this example. Click the Play button to run the Gazebo world.

Specify the IP address and port number of the ROS master in Gazebo so that MATLAB® can communicate with the robot simulator. For this example, the ROS master in Gazebo uses the IP address of

192.168.203.131displayed on the Desktop. Adjust therosIPvariable based on your VM.Start the ROS 1 network using

rosinit.

rosIP = '192.168.203.131'; % IP address of ROS-enabled machine rosinit(rosIP,11311); % Initialize ROS connection

The value of the ROS_IP environment variable, 192.168.31.1, will be used to set the advertised address for the ROS node. Initializing global node /matlab_global_node_36570 with NodeURI http://192.168.31.1:51073/

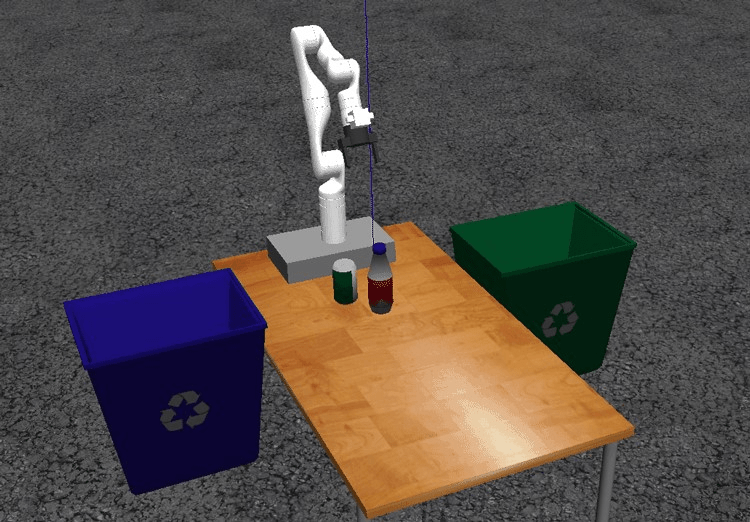

Once Gazebo world starts running, the VM loads a Kinova Gen3 Robot arm on a table with one recycling bin on each side. To simulate and control the robot arm in Gazebo, the VM contains the ros_kortex ROS package, which are provided by Kinova.

The packages use ros_control to control the joints to desired joint positions. For additional details on using the VM, refer to Get Started with Gazebo and Simulated TurtleBot (ROS Toolbox)

Stateflow Chart

This example uses a Stateflow chart to schedule tasks in the example. Open the chart to examine the contents and follow state transitions during chart execution.

edit exampleHelperFlowChartPickPlaceROSGazebo.sfxThe chart dictates how the manipulator interacts with the objects, or parts. It consists of basic initialization steps, followed by two main sections:

Identify Parts and Determine Where to Place Them

Execute Pick-and-Place Workflow

For a high-level description of the pick-and-place steps, see Pick-and-Place Workflow Using Stateflow for MATLAB.

Opening and closing the gripper

The command for activating the gripper, exampleCommandActivateGripperROSGazebo, sends an action request to open and close the gripper implemented in Gazebo. To send a request to open the gripper, the following code is used. Note: The example code shown just illustrates what the command does. Do not run.

[gripAct,gripGoal] = rosactionclient('/my_gen3/custom_gripper_controller/gripper_cmd'); gripperCommand = rosmessage('control_msgs/GripperCommand'); gripperCommand.Position = 0.0; gripGoal.Command = gripperCommand; sendGoal(gripAct,gripGoal);

Moving the Manipulator to a Specified Pose

The exampleCommandMoveToTaskConfigROSGazebo command function is used to move the manipulator to specified poses.

Planning

The path planning generates simple task-space trajectories from an initial to a desired task configuration using trapveltraj and transformtraj. For more details on planning and executing trajectories, see Plan and Execute Task- and Joint-Space Trajectories Using Kinova Gen3 Manipulator.

Joint Trajectory Controller in ROS

After generating a joint trajectory for the robot to follow, exampleCommandMoveToTaskConfigROSGazebo samples the trajectory at the desired sample rate, packages it into joint-trajectory ROS messages and sends an action request to the joint-trajectory controller implemented in the Kinova ROS package.

Detecting and Classifying Objects in the Scene

The functions exampleCommandDetectPartsROSGazebo and exampleCommandClassifyPartsROSGazebo use the simulated end-effector camera feed from the robot and apply a pretrained deep-learning model to detect the recyclable parts. The model takes a camera frame as input and outputs the 2-D location of the object (pixel position) and the type of recycling it requires (blue or green bin). The 2-D location on the image frame is mapped to the robot base frame.

Deep-Learning Model Training: Acquiring and Labeling Gazebo Images

The detection model was trained using a set of images acquired in the simulated environment within the Gazebo world with the two classes of objects (bottle, can) placed on different locations of the table. The images are acquired from the simulated camera on-board the robot, which is moved along the horizontal and vertical planes to get images of the objects from many different camera perspectives.

The images are then labeled using the Image Labeler (Computer Vision Toolbox) app, creating the training dataset for the YOLO v2 detection model. trainYOLOv2ObjectDetector (Computer Vision Toolbox) trains the model. To see how to train a YOLO v2 network in MATLAB, see Train YOLO v2 Network for Vehicle Detection (Computer Vision Toolbox).

The trained model is deployed for online inference on the single image acquired by the on-board camera when the robot is in the home position. The detect (Computer Vision Toolbox) function returns the image position of the bounding boxes of the detected objects, along with their classes, that is then used to find the position of the center of the top part of the objects. Using a simple camera projection approach, assuming the height of the objects is known, the object position is projected into the world and finally used as reference position for picking the object. The class associated with the bounding boxes decides which bin to place the object.

Start the Pick-and-Place Task

This simulation uses a Kinova Gen3 manipulator with a Robotiq gripper attached. Load the robot model from a .mat file as a rigidBodyTree object.

load('exampleHelperKINOVAGen3GripperROSGazebo.mat'); Initialize the Pick-and-Place Coordinator

Set the initial robot configuration. Create the coordinator, which handles the robot control, by giving the robot model, initial configuration, and end-effector name.

initialRobotJConfig = [3.5797 -0.6562 -1.2507 -0.7008 0.7303 -2.0500 -1.9053];

endEffectorFrame = "gripper";Initialize the coordinator by giving the robot model, initial configuration, and end-effector name.

coordinator = exampleHelperCoordinatorPickPlaceROSGazebo(robot,initialRobotJConfig, endEffectorFrame);

Specify the home configuration and two poses for placing objects.

coordinator.HomeRobotTaskConfig = getTransform(robot, initialRobotJConfig, endEffectorFrame);

coordinator.PlacingPose{1} = trvec2tform([[0.2 0.55 0.26]])*axang2tform([0 0 1 pi/2])*axang2tform([0 1 0 pi]);

coordinator.PlacingPose{2} = trvec2tform([[0.2 -0.55 0.26]])*axang2tform([0 0 1 pi/2])*axang2tform([0 1 0 pi]);Run and Visualize the Simulation

Connect the coordinator to the Stateflow Chart. Once started, the Stateflow chart is responsible for continuously going through the states of detecting objects, picking them up and placing them in the correct staging area.

coordinator.FlowChart = exampleHelperFlowChartPickPlaceROSGazebo('coordinator', coordinator); Use a dialog to start the pick-and-place task execution. Click Yes in the dialog to begin the simulation.

answer = questdlg('Do you want to start the pick-and-place job now?', ... 'Start job','Yes','No', 'No'); switch answer case 'Yes' % Trigger event to start Pick and Place in the Stateflow Chart coordinator.FlowChart.startPickPlace; case 'No' coordinator.FlowChart.endPickPlace; delete(coordinator.FlowChart) delete(coordinator); end

Ending the Pick-and-Place Task

The Stateflow chart will finish executing automatically after 3 failed attempts to detect new objects. To end the pick-and-place task prematurely, uncomment and execute the following lines of code or press Ctrl+C in the command window.

% coordinator.FlowChart.endPickPlace; % delete(coordinator.FlowChart); % delete(coordinator);

Observe the Simulation States

During execution, the active states at each point in time are highlighted in blue in the Stateflow chart. This helps keeping track of what the robot does and when. You can click through the subsystems to see the details of the state in action.

Visualize the Pick-and-Place Action in Gazebo

The Gazebo world shows the robot in the working area as it moves parts to the recycling bins. The robot continues working until all parts have been placed. When the detection step doesn't find any more parts four times, the Stateflow chart exits.

if strcmp(answer,'Yes') while coordinator.NumDetectionRuns < 4 % Wait for no parts to be detected. end end

Shutdown the ROS network after finishing the example.

rosshutdown

Shutting down global node /matlab_global_node_36570 with NodeURI http://192.168.31.1:51073/

Copyright 2020 The MathWorks, Inc.