GPU Coder generates optimized CUDA® code from MATLAB code and Simulink models. The generated code includes CUDA kernels for parallelizable parts of your deep learning, embedded vision, and radar and signal processing algorithms. For high performance, the generated code can call NVIDIA® TensorRT®. You can integrate the generated CUDA into your project as source code or static/dynamic libraries and compile it for modern NVIDIA GPUs, including those embedded on NVIDIA Jetson™ and NVIDIA DRIVE™ platforms. You can access peripherals on the Jetson and DRIVE platforms and incorporate manually written CUDA into the generated code.

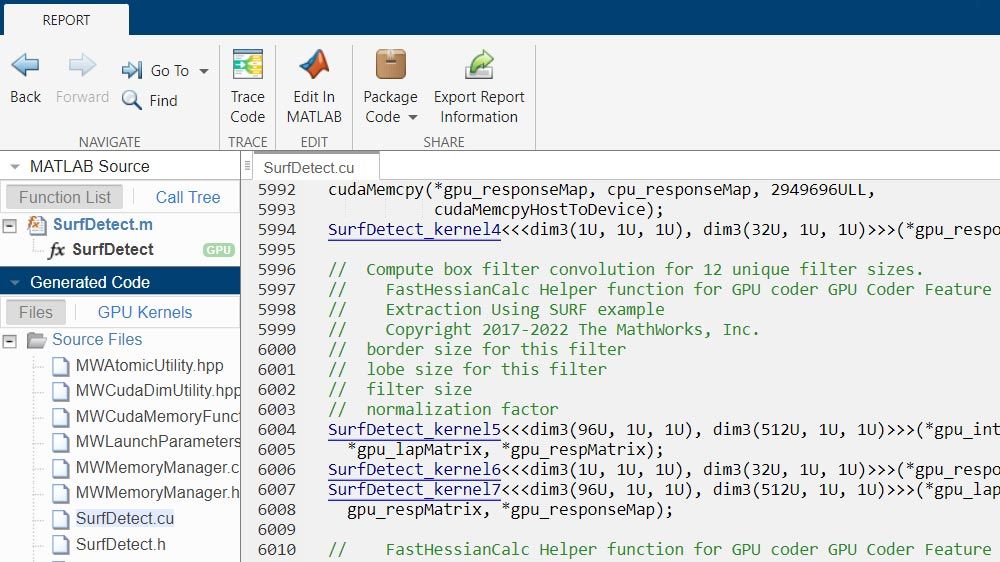

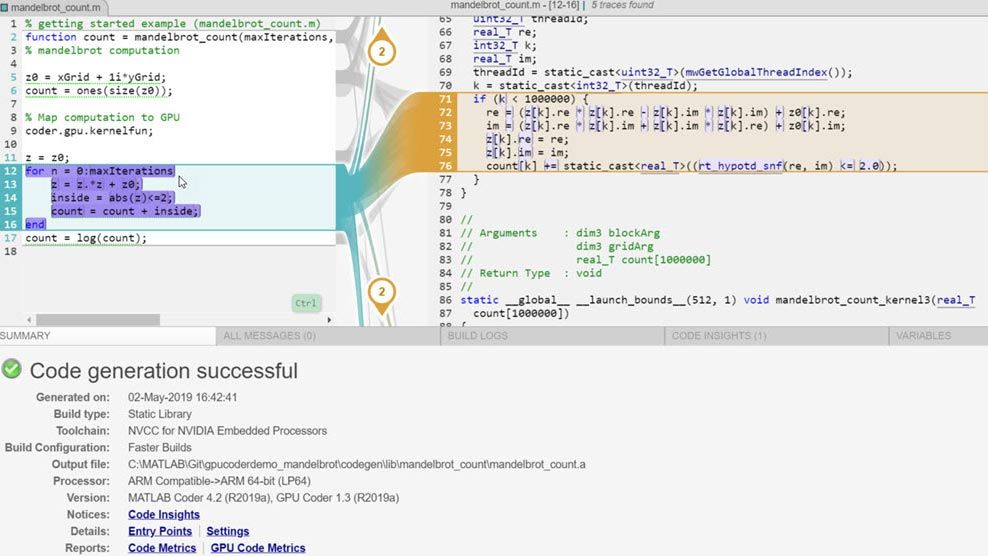

When used with Embedded Coder, GPU Coder lets you profile the generated CUDA to identify bottlenecks and opportunities for performance optimization. Bidirectional links let you trace between MATLAB code and generated CUDA. You can verify the numerical behavior of the generated code via software-in-the-loop (SIL) and processor-in-the-loop (PIL) testing.

Generate CUDA Code from MATLAB

Compile and run CUDA code generated from your MATLAB algorithms on popular NVIDIA GPUs, from desktop RTX cards to data centers to embedded Jetson and DRIVE platforms. Deploy the generated code to your customers royalty-free.

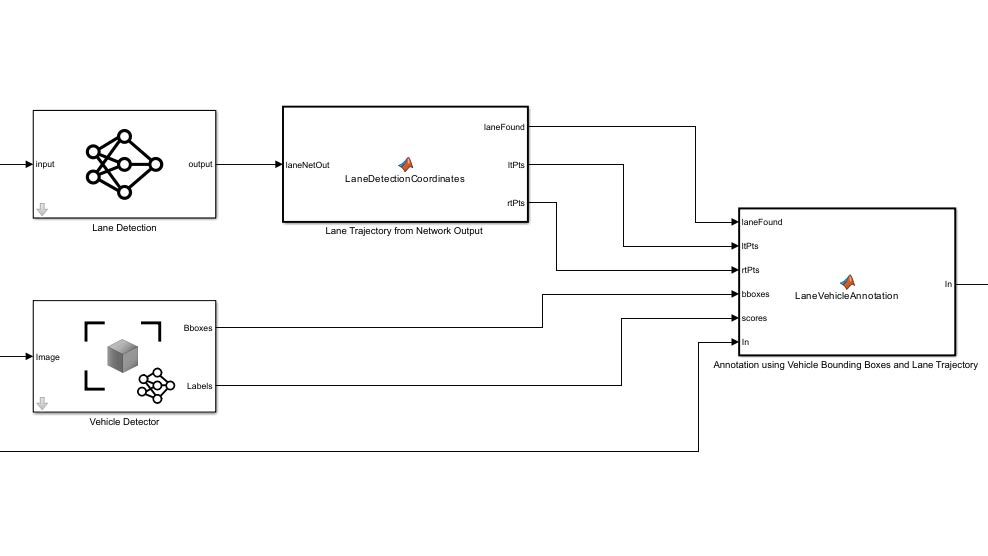

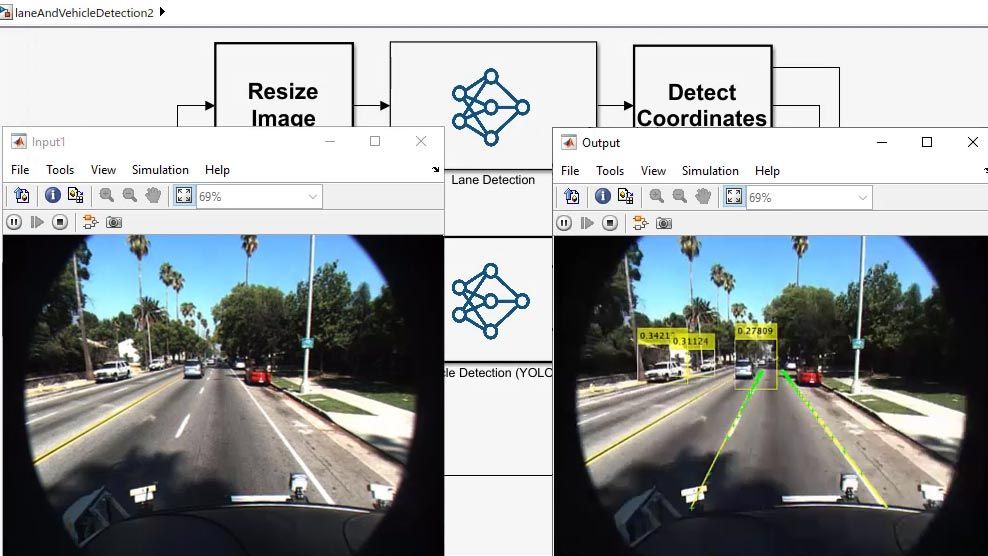

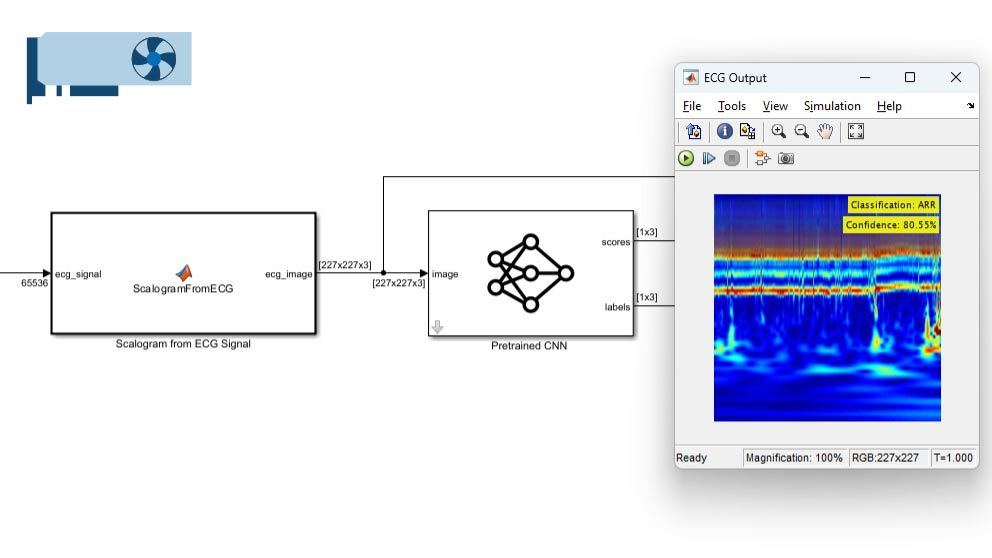

Generate CUDA Code from Simulink

Use Simulink Coder with GPU Coder to generate CUDA code from your Simulink models and deploy it to NVIDIA GPUs. Accelerate compute-intensive portions of Simulink simulations on NVIDIA GPUs.

Deploy to NVIDIA Jetson and DRIVE

GPU Coder automates deployment of generated code onto NVIDIA Jetson and DRIVE platforms. Access peripherals, acquire sensor data, and deploy your algorithm along with peripheral interface code to the board for standalone execution.

Generate Code for Deep Learning

Deploy a variety of predefined or customized deep learning networks to NVIDIA GPUs. Generate code for preprocessing and postprocessing along with your trained deep learning networks to deploy complete algorithms.

Optimize Generated Code

GPU Coder automatically applies optimizations including memory management, kernel fusion, and auto-tuning. Reduce memory footprint by generating INT8 or bfloat16 code. Further boost performance by integrating with TensorRT.
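To make the memory-footprint claim concrete, here is a minimal C++ sketch of storing values as INT8 instead of 32-bit floats. It assumes a simple symmetric linear scheme (one scale per tensor, range -127 to 127); the page does not describe how GPU Coder actually quantizes, so `QuantizedTensor`, `quantize_int8`, and `dequantize` are hypothetical names used only for illustration.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container: each value takes 1 byte instead of the 4 bytes
// a float needs, plus one shared scale factor for the whole tensor.
struct QuantizedTensor {
    std::vector<std::int8_t> values;
    float scale;  // real value is approximately values[i] * scale
};

// Assumed scheme: symmetric linear quantization with a per-tensor scale
// chosen so the largest magnitude maps to 127.
QuantizedTensor quantize_int8(const std::vector<float>& x) {
    float max_abs = 0.0f;
    for (float v : x) {
        max_abs = std::max(max_abs, std::fabs(v));
    }
    // Avoid dividing by zero when every input is zero.
    const float scale = (max_abs > 0.0f) ? max_abs / 127.0f : 1.0f;

    QuantizedTensor q{{}, scale};
    q.values.reserve(x.size());
    for (float v : x) {
        long r = std::lround(v / scale);
        r = std::max(-127L, std::min(127L, r));  // clamp to the INT8 range used here
        q.values.push_back(static_cast<std::int8_t>(r));
    }
    return q;
}

// Recover an approximate float from the stored INT8 value.
float dequantize(const QuantizedTensor& q, std::size_t i) {
    return static_cast<float>(q.values[i]) * q.scale;
}
```

Under this assumed scheme, the stored data is a quarter the size of the float original, at the cost of rounding each value to the nearest multiple of `scale`.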

Profile and Analyze Generated Code

Use the GPU Coder Performance Analyzer to profile generated CUDA code and identify opportunities to further improve execution speed and memory footprint.

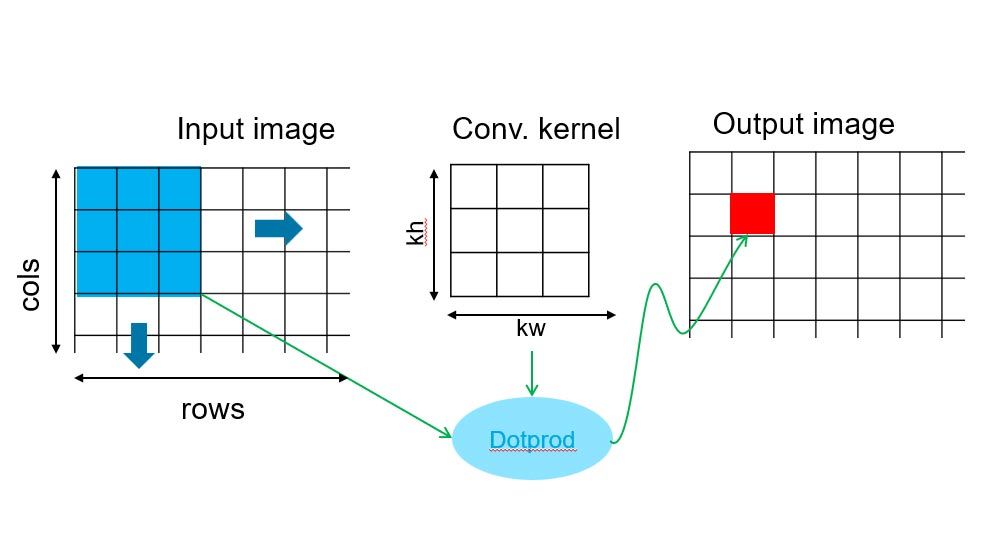

Use Design Patterns to Boost Performance

Design patterns, including stencil processing and reductions, are applied automatically when available to increase the performance of generated code. You can also manually invoke them using specific pragmas.
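For readers unfamiliar with the two patterns named above, the following CPU-side C++ sketch shows the shape of computation each one refers to. It is not GPU Coder output and does not use any GPU Coder API; `stencil3` and `reduce_sum` are hypothetical names, and the zero-padding at the edges is an assumption chosen for brevity.

```cpp
#include <cstddef>
#include <vector>

// Stencil pattern: each output element depends only on a fixed window of
// neighboring inputs, so all outputs can be computed independently
// (on a GPU, typically one thread per output element).
// Here: a 3-point moving average; out-of-range neighbors count as zero.
std::vector<double> stencil3(const std::vector<double>& in) {
    std::vector<double> out(in.size(), 0.0);
    for (std::size_t i = 0; i < in.size(); ++i) {
        const double left  = (i > 0) ? in[i - 1] : 0.0;
        const double right = (i + 1 < in.size()) ? in[i + 1] : 0.0;
        out[i] = (left + in[i] + right) / 3.0;
    }
    return out;
}

// Reduction pattern: combine all elements into one value with an
// associative operator. The pairwise folding below mirrors the tree
// shape a parallel reduction uses: the additions inside each pass are
// independent of one another, and the active range halves every pass.
double reduce_sum(std::vector<double> data) {
    if (data.empty()) {
        return 0.0;
    }
    for (std::size_t n = data.size(); n > 1; n = (n + 1) / 2) {
        const std::size_t half = n / 2;
        for (std::size_t i = 0; i < half; ++i) {
            data[i] += data[n - 1 - i];  // fold the back half onto the front
        }
    }
    return data[0];
}
```

Both loops are written sequentially here, but neither has a dependency between iterations of the inner loop, which is what makes these patterns good candidates for parallel kernels.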

Log Signals, Tune Parameters, and Verify Code Behavior

Use GPU Coder with Simulink Coder to log signals and tune parameters in real time. Add Embedded Coder to interactively trace between MATLAB and generated CUDA code and to numerically verify the behavior of the generated CUDA code via SIL testing.

Accelerate MATLAB and Simulink Simulations

Call generated CUDA code as a MEX function from your MATLAB code to speed execution. Use Simulink Coder with GPU Coder to accelerate compute-intensive portions of MATLAB Function blocks in your Simulink models on NVIDIA GPUs.

“From data annotation to choosing, training, testing, and fine-tuning our deep learning model, MATLAB had all the tools we needed—and GPU Coder enabled us to rapidly deploy to our NVIDIA GPUs even though we had limited GPU experience.”

Valerio Imbriolo, Drass Group